Living Observatory Sensor Network

Sensor Node Mark I

Development of the first version of the sensor node began in 2013. It was originally intended to support a proof-of-concept demo of a cross-reality version of Tidmarsh, and it integrated a microcontroller, an 802.15.4 radio, and a set of environmental sensors.

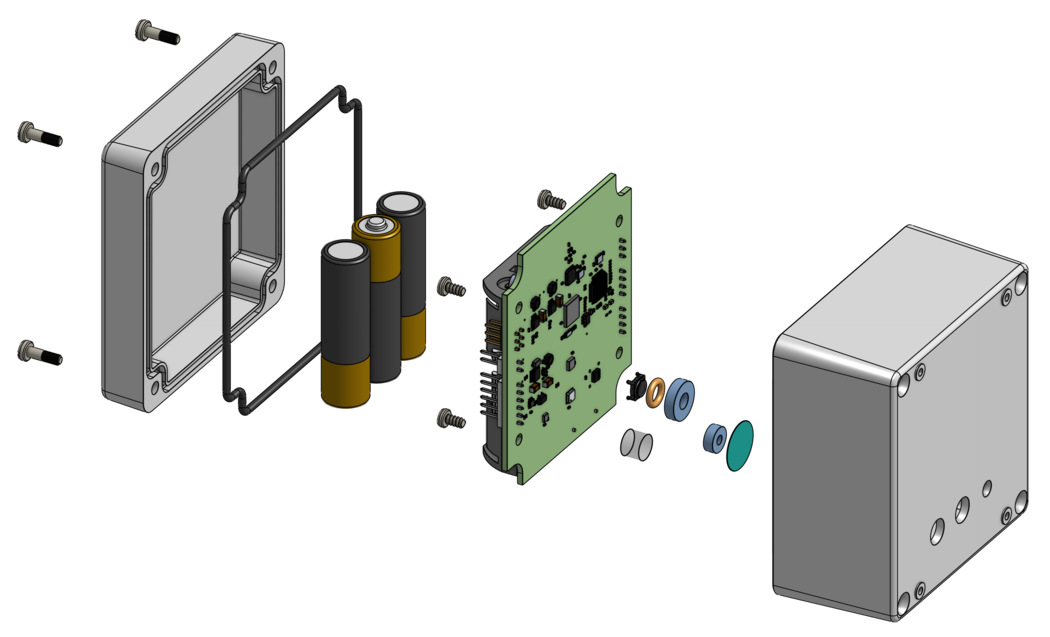

The first-generation sensor node takes its form from the Hammond RP1605 enclosure that houses the electronics. The enclosures were modified on the milling machine in the Media Lab shop to add apertures for the light, temperature/humidity, and pressure sensors.

- Microcontroller: Atmel ATXmega128A4U

- Radio: Atmel AT86RF231

The core system consists of an Atmel ATXmega128A4U 8-bit microcontroller and an Atmel AT86RF231 radio for communication. The microcontroller runs a custom real-time operating system to schedule reading from the sensors, assemble data packets, and transmit them via the low-power 802.15.4 wireless network. The network protocol is adapted from Atmel Lightweight Mesh.
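As a rough illustration of the "assemble data packets" step, the sketch below packs one set of readings into a compact binary payload. The field layout, the scaling factors, and the function names `assemble_payload` and `parse_payload` are assumptions made for this example and do not describe the node's actual packet format. The node firmware itself would not be written in Python; it is used here only to keep the example short. The one fixed constraint is that an IEEE 802.15.4 frame is at most 127 bytes at the PHY layer, so payloads have to stay small.

```python
import struct

# Hypothetical payload layout (NOT the actual packet format used by the nodes):
#   node id          uint16
#   sequence number  uint16
#   temperature      int16, hundredths of a degree C
#   humidity         uint16, hundredths of a percent RH
#   pressure         uint32, pascals
#   illuminance      uint16, lux (saturates at 65535)
# "<" selects little-endian with no padding, which is an assumption here.
PAYLOAD_FORMAT = "<HHhHIH"
MAX_PHY_FRAME_BYTES = 127  # IEEE 802.15.4 maximum PHY frame size


def assemble_payload(node_id, seq, temp_c, rh_percent, pressure_pa, lux):
    """Pack one set of sensor readings into a fixed-size binary payload."""
    return struct.pack(
        PAYLOAD_FORMAT,
        node_id,
        seq & 0xFFFF,               # wrap the sequence counter at 16 bits
        round(temp_c * 100),
        round(rh_percent * 100),
        int(pressure_pa),
        min(int(lux), 0xFFFF),      # saturate rather than overflow
    )


def parse_payload(payload):
    """Inverse of assemble_payload, as a receiver might implement it."""
    node_id, seq, temp, rh, pressure, lux = struct.unpack(PAYLOAD_FORMAT, payload)
    return {
        "node_id": node_id,
        "seq": seq,
        "temp_c": temp / 100,
        "rh_percent": rh / 100,
        "pressure_pa": pressure,
        "lux": lux,
    }


payload = assemble_payload(7, 42, 21.37, 55.2, 101325, 830)
reading = parse_payload(payload)
```

With this layout the payload is 14 bytes, which leaves most of the frame for the MAC and mesh headers.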

The sensor node was designed to be powered from three AA batteries, which under normal operation can provide several years of life before replacement.
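A multi-year battery life is plausible only if the node sleeps almost all of the time. The back-of-the-envelope sketch below shows the arithmetic. Every number in it (pack capacity, sleep and active currents, duty cycle) is a hypothetical placeholder chosen for illustration, not a measurement from the actual node, and the estimate ignores battery self-discharge and temperature effects.

```python
HOURS_PER_YEAR = 24 * 365


def average_current_ma(sleep_ma, active_ma, duty_cycle):
    """Time-weighted average current for a node that is active
    for a fraction `duty_cycle` of the time and asleep otherwise."""
    return sleep_ma * (1.0 - duty_cycle) + active_ma * duty_cycle


def battery_life_years(capacity_mah, avg_ma):
    """Idealized battery life: capacity divided by average current draw."""
    return capacity_mah / avg_ma / HOURS_PER_YEAR


# Hypothetical values, for illustration only:
avg = average_current_ma(sleep_ma=0.005, active_ma=15.0, duty_cycle=0.005)
years = battery_life_years(capacity_mah=2500, avg_ma=avg)
print(f"average current: {avg:.4f} mA, estimated life: {years:.1f} years")
```

The useful takeaway is the sensitivity. With these placeholder figures the active current dominates the average even at a duty cycle of half a percent, so how often the node wakes to sample and transmit matters far more than the sleep current.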

A solar charger was also included on the board so that a few nodes in the network could have external solar panels. These rechargeable nodes could serve as network repeaters (extending the range of the mesh) or power external sensors such as an anemometer for wind speed or soil moisture probes.

The board carries the following sensors:

- Temperature/Humidity: Sensirion SHT2x

- Atmospheric Pressure: Bosch BMP180

- Ambient Light: Intersil ISL29023

- Accelerometer: Analog Devices ADXL362

The following features were planned and partially tested, but ultimately were not used in the final deployment.

One of the ideas from the conceptual stages of the project was to hang sensor nodes in trees, and use an on-board accelerometer to detect the swaying of the tree (and the sensor node, depending on how it was mounted) to infer wind speed.

We did some experimentation with a node hanging in a tree outside the Media Lab.
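One simple way to turn raw accelerometer samples into a "how much is the node swaying" number is to look at how much the acceleration magnitude varies over a short window. The sketch below is an illustration of that idea only and is not the method used in the experiments. The function name `sway_intensity` and the sample values are hypothetical, and mapping the result to an actual wind speed would require calibration against a reference anemometer.

```python
import math


def sway_intensity(samples):
    """Standard deviation of acceleration magnitude over a window.

    `samples` is a sequence of (x, y, z) readings in g. A motionless node
    reads a constant 1 g from gravity, so the result is near zero; a swaying
    node sees the magnitude fluctuate, so the result grows with the motion.
    """
    magnitudes = [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]
    mean = sum(magnitudes) / len(magnitudes)
    variance = sum((m - mean) ** 2 for m in magnitudes) / len(magnitudes)
    return math.sqrt(variance)


# Hypothetical windows of samples, for illustration only:
still = [(0.0, 0.0, 1.0)] * 8
swaying = [(0.1, 0.0, 1.05), (-0.1, 0.0, 0.95), (0.2, 0.1, 1.1), (-0.2, -0.1, 0.9)]

print(sway_intensity(still))
print(sway_intensity(swaying))
```

Using the magnitude rather than individual axes makes the metric independent of how the node happens to be oriented when it is hung, which matters if mounting is not controlled.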

Audio streaming was an important part of our vision for the sensor network. In addition to our experiments with high-quality wired microphone installations to livestream and capture the restoration process, we envisioned that the low-power sensor nodes might also be able to stream audio. To this end, a VS1063a audio DSP/codec was included in the design. This chip has a built-in microphone preamplifier and outputs compressed audio in MP3 and Ogg Vorbis formats.

The 802.15.4 network protocol used by the sensor nodes has a maximum data rate of 250 kbps. A medium-quality mono audio stream can be transmitted at about 64 kbps, so in theory the network can support a few audio streams (keeping in mind that protocol overhead and interference mean that the theoretical maximum is never achievable). The idea was that nodes could selectively turn on their microphones a few at a time.
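The stream-count estimate is simple division, sketched below. The 250 kbps and 64 kbps figures come from the text above. The `usable_fraction` parameter and the 0.5 value are hypothetical, included only to show how quickly overhead eats into the budget; they are not measured values for this network.

```python
PHY_RATE_KBPS = 250      # nominal 802.15.4 data rate, from the text
AUDIO_STREAM_KBPS = 64   # medium-quality mono stream, from the text


def max_streams(link_kbps, stream_kbps, usable_fraction=1.0):
    """Number of whole streams that fit in the usable share of the link."""
    return int((link_kbps * usable_fraction) // stream_kbps)


# Ideal case: the full nominal rate is available.
print(max_streams(PHY_RATE_KBPS, AUDIO_STREAM_KBPS))        # → 3

# Hypothetical case: only half the nominal rate is usable after
# protocol overhead, retries, and interference.
print(max_streams(PHY_RATE_KBPS, AUDIO_STREAM_KBPS, 0.5))   # → 1
```

Multi-hop forwarding would reduce the count further, since each relayed packet occupies the shared channel once per hop.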

The audio codec was tested in the lab, and the network successfully supported a single audio stream. However, the heavy load on the network and the power consumption of the codec (especially given that most of the sensor nodes had no means of recharging their batteries) made it impractical. Ultimately, we decided to focus on the wired microphones for audio streaming, and the audio codec chip was not installed on the production run of sensors.